Vatican, Microsoft and IBM Want Regulations on AI and Face Recognition

Representatives of Microsoft and IBM have signed Pope Francis' document on the ethical development of artificial intelligence. The companies have undertaken to promote the development of technology that respects human rights, with particular emphasis on facial recognition systems.

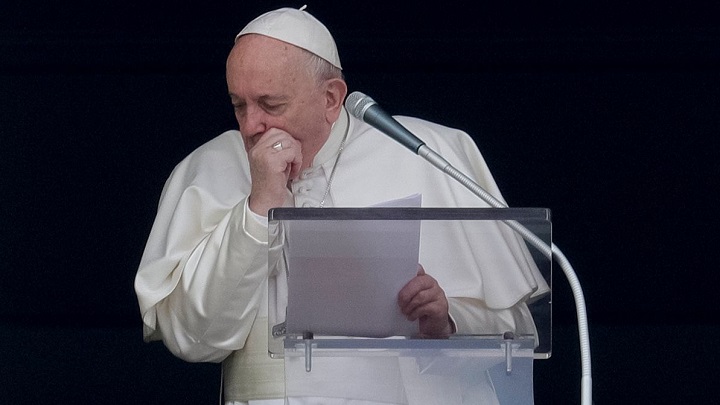

What do Microsoft, IBM and Pope Francis have in common? Fears about the development of AI. Last Friday, a document called the Rome Call for AI Ethics was signed, which, as the name suggests, calls for ethical regulation of artificial intelligence. The document was signed by Microsoft President Brad Smith, IBM Executive Vice President John Kelly and Archbishop Vincenzo Paglia, President of the Pontifical Academy for Life, who signed on behalf of the Pope. Francis himself was absent from the Vatican conference for health reasons.

In a text produced with the support of both corporations, Pope Francis calls for the "ethical development of algorithms" or, in short, "algor-ethics". Algorithms are to be created "with the whole of humanity in mind", thus respecting the human rights and privacy of individuals. In addition, the principles of artificial intelligence should be understandable to all, reliable and impartial. The Pope also warns against the risks associated with the development of AI, the greatest of which is the use of technology by the privileged few at the cost of the majority. This is what Vincenzo Paglia said at the Vatican:

"This asymmetry, by which a select few know everything about us while we know nothing about them, dulls critical thought and the conscious exercise of freedom."

Similar declarations were made by representatives of Microsoft and IBM, and not only at the Vatican conference. Even before the Rome Call for AI Ethics was signed, Microsoft CEO Satya Nadella had called for the regulation of artificial intelligence. Speaking last week at the Indian edition of the Future Decoded conference (via Hindustan Times), he stressed the need to ensure that AI is not contaminated by "unconscious prejudices" (for example, on racial or cultural grounds). Perhaps that is why the document signed on Friday paid special attention to facial recognition systems as an example of potentially risky technology. It is possible that this was the main reason for this unusual cooperation (as Brad Smith himself admits).

This is not the first sign of concern about the applications of AI. The European Commission has been considering the issue since January, although the text of its so-called White Paper has been public for only two weeks. The paper also mentions facial recognition technology. It stresses that under current legislation on personal data protection (including the GDPR), the collection and use of such information is already prohibited except in exceptional cases. The public debate, which will last until May 19, should help to define these cases precisely.


Author: Jacob Blazewicz

